The competition

This Kaggle competition involves identifying landmarks from photos. There are many possible landmarks, about 80k of them, and many are not very well known or lack distinctive architectural or geological features.

The model should take an image as input and output a landmark id and an associated confidence. The landmark id indicates which landmark the model thinks is in the image, such as Alamodome or Manitoga, and the confidence is a number measuring how sure the model is of its prediction. Each model prediction is therefore the tuple (landmark id, confidence).

Global average precision

Kaggle measures model performance with the global average precision (GAP) metric, which is calculated from a list of predictions sorted by prediction confidence.

Consider the following 3 example cases (TABLE 1), where each case lists the correctness of 6 predictions in descending order of prediction confidence (o = correct, x = incorrect).

| case 1 | case 2 | case 3 |
|---|---|---|
| o | x | o |
| o | x | x |
| o | x | o |
| x | o | x |
| x | o | o |
| x | o | x |

In calculating the GAP, we go through the list of predictions, keeping track of the number of predictions that we have gone through and the number of correct predictions encountered. Using these, at any given prediction down the list, the current precision can be calculated as:

$$ \frac{\text{Number of correct predictions so far}}{\text{Number of predictions so far}} $$

Having gone through the whole list, we sum together all the current precisions, though we leave out those where the prediction is wrong. For case 1, this is:

$$ 1 \cdot \frac{1}{1} + 1 \cdot \frac{2}{2} + 1 \cdot \frac{3}{3} + 0 \cdot \frac{3}{4} + 0 \cdot \frac{3}{5} + 0 \cdot \frac{3}{6} = 3 $$

This sum is proportional to the GAP. Repeating the same procedure for cases 2 and 3 gives:

Case 2 $$ 0 \cdot \frac{0}{1} + 0 \cdot \frac{0}{2} + 0 \cdot \frac{0}{3} + 1 \cdot \frac{1}{4} + 1 \cdot \frac{2}{5} + 1 \cdot \frac{3}{6} = \frac{23}{20} = 1.15 $$

Case 3 $$ 1 \cdot \frac{1}{1} + 0 \cdot \frac{1}{2} + 1 \cdot \frac{2}{3} + 0 \cdot \frac{2}{4} + 1 \cdot \frac{3}{5} + 0 \cdot \frac{3}{6} = \frac{34}{15} \approx 2.27 $$

Even though all 3 cases have the same number of correct predictions out of 6, because of where they appear in the list, the calculated GAPs are different. For case 1, all the correct predictions come at the start of the list, while for case 2 they come near the end.

In general, having higher confidence for correct predictions and lower confidence for incorrect predictions will result in a higher GAP. Conversely, having higher confidence for incorrect predictions and lower confidence for correct predictions will result in a lower GAP. Therefore, the order of the predictions naturally gives a weight to each of them.

To get the actual GAP, the above sums are divided by the number of images whose ground-truth label is actually a landmark. So, if all 6 images are landmarks, the GAPs for cases 1, 2 and 3 are 0.5, 0.19 and 0.38, respectively.
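The calculation above can be sketched in Python as follows. This is a minimal illustration that assumes each prediction comes as a correctness flag plus a confidence; the function name `global_average_precision` and the toy confidence values are hypothetical, chosen only so that the list order matches TABLE 1.

```python
def global_average_precision(correct, confidences, num_landmark_images):
    """GAP for a list of predictions, one per image.

    correct:             list of bools, True if the predicted landmark id is right
    confidences:         list of floats, one per prediction
    num_landmark_images: number of images whose ground truth is a landmark
    """
    # Walk through the predictions from highest to lowest confidence
    order = sorted(range(len(correct)), key=lambda i: confidences[i], reverse=True)
    hits = 0
    total = 0.0
    for rank, i in enumerate(order, start=1):
        if correct[i]:
            hits += 1
            total += hits / rank  # precision so far, counted only at correct predictions
    return total / num_landmark_images


# Toy confidences in descending order, so the list order matches TABLE 1
confidences = [0.9, 0.8, 0.7, 0.6, 0.5, 0.4]
case_1 = [True, True, True, False, False, False]
case_2 = [False, False, False, True, True, True]
case_3 = [True, False, True, False, True, False]

for name, case in [("case 1", case_1), ("case 2", case_2), ("case 3", case_3)]:
    print(name, round(global_average_precision(case, confidences, 6), 2))
```

With all 6 images treated as landmarks, this prints 0.5, 0.19 and 0.38 for the three cases.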

Data

| Dataset name | #(images) | #(unique landmarks) | #(non-landmark images) |
|---|---|---|---|
| Public Train | 1,580,470 | 81,313 | 0 |
| Public Test | 10,345 | \(C_{public}\) | > 0 |
| Private Train | ~100,000 | \(C_{private}\) | 0 |
| Private Test | ~21,000 | \(C_{private}\) | > 0 |

The training sets do not have any non-landmark images, while the test sets have unknown numbers of them.

The unique landmarks in Public Train cover all those that will be encountered in the other datasets. The number of unique landmarks in the test sets is unknown, but it is the same for Private Train and Private Test; in fact, by competition design, the two share the same unique landmarks.

Team DP's solution

Model training

The model is trained on the Public Train set. The head (or final layer) outputs 81313 logits, one for each of the possible landmark ids. The logits are then fed to the ArcFace loss function. The model parameters are optimised by minimising this loss.

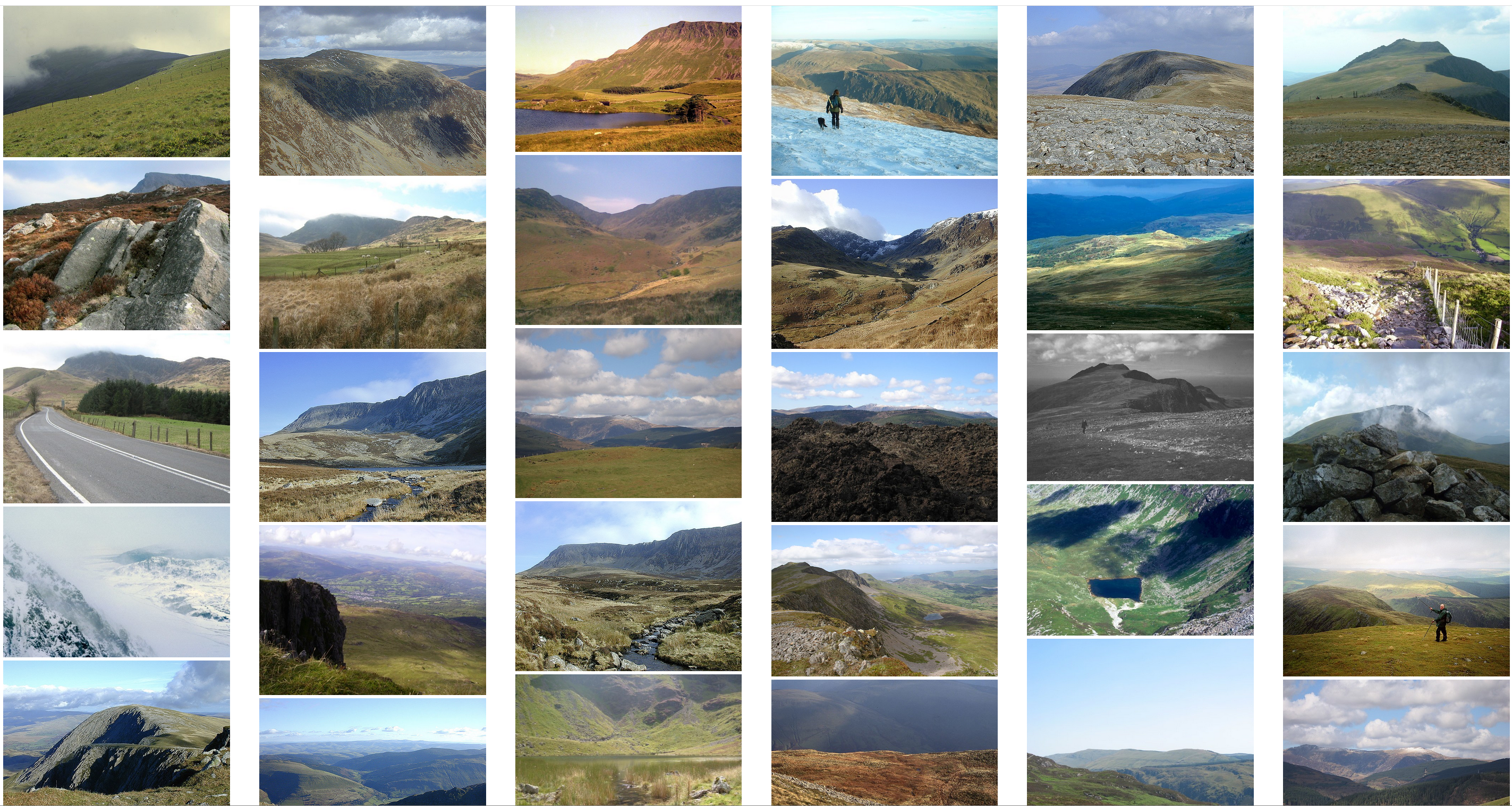

ArcFace is particularly suited for situations where there is a lot of intra-class variability, which is definitely the case here. For example, in FIGURE 1, it can be seen that when the landmark is a mountain, there are many angles and locations from which a photo can be taken and still be labeled with the same landmark id.
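For readers unfamiliar with ArcFace, the NumPy sketch below shows the general idea: embeddings and per-class weight vectors are L2-normalised so their dot products are cosines, an additive angular margin `m` is applied to the angle of the true class only, and the result is scaled by `s` and passed through ordinary softmax cross-entropy. The function name, the values `s=30.0` and `m=0.5`, and the toy shapes are illustrative assumptions, not Team DP's configuration. The sketch also omits the extra handling that practical implementations often add for angles where θ + m exceeds π.

```python
import numpy as np


def arcface_loss(embeddings, weights, labels, s=30.0, m=0.5):
    """Mean ArcFace loss over a batch (illustrative sketch).

    embeddings: (B, D) image features from the backbone
    weights:    (C, D) one learnable vector per landmark class
    labels:     (B,)   integer landmark ids in [0, C)
    s, m:       scale and additive angular margin (assumed values)
    """
    # L2-normalise so that dot products are cosines of angles
    x = embeddings / np.linalg.norm(embeddings, axis=1, keepdims=True)
    w = weights / np.linalg.norm(weights, axis=1, keepdims=True)
    cos = np.clip(x @ w.T, -1.0, 1.0)  # (B, C)

    rows = np.arange(len(labels))
    logits = cos.copy()
    # Add the angular margin m to the true-class angle only
    theta = np.arccos(cos[rows, labels])
    logits[rows, labels] = np.cos(theta + m)
    logits *= s

    # Ordinary softmax cross-entropy on the modified logits (stable log-sum-exp)
    logits -= logits.max(axis=1, keepdims=True)
    log_probs = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    return -log_probs[rows, labels].mean()


rng = np.random.default_rng(0)
emb = rng.normal(size=(4, 8))  # 4 toy images, 8-dim embeddings
W = rng.normal(size=(10, 8))   # 10 toy landmark classes
y = np.array([0, 3, 3, 7])
print(arcface_loss(emb, W, y))
```

With `m=0` the function reduces to plain softmax cross-entropy on scaled cosines; the margin makes the true class harder to satisfy, which pushes embeddings of the same landmark closer together in angle.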

Note that training here deals only with landmarks: Public Train has 81,313 landmarks, and the head has the same number of output logits. Nothing so far involves non-landmarks.

Validation data

To monitor training progress, the GAP is calculated at the end of each epoch on a validation set. It's crucial here to choose a validation set that contains non-landmarks because the test sets have non-landmarks.

The test set from the previous year's edition of the competition is well suited for this purpose, since its labels were released after that competition ended. It shares some landmarks with Public Train and is dominated by non-landmarks, which make up about 98% of its images. So, it's used as the validation set. Let's call this set Valid.

Making predictions for validation using softmax

There are two ways of making the predictions using the trained model.

The first is to pass the model's output logits through a softmax layer and pick the landmark with the largest softmax probability as the predicted landmark, with that probability as the associated prediction confidence.
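A minimal NumPy sketch of this rule, assuming the model's logits for a batch of images are already available as an `(N, C)` array; the function name `predict_softmax` and the toy logits are hypothetical.

```python
import numpy as np


def predict_softmax(logits):
    """Return (landmark ids, confidences) for an (N, C) array of logits."""
    z = logits - logits.max(axis=1, keepdims=True)  # subtract row max for numerical stability
    probs = np.exp(z)
    probs /= probs.sum(axis=1, keepdims=True)
    ids = probs.argmax(axis=1)                      # most probable landmark per image
    conf = probs[np.arange(len(ids)), ids]          # its softmax value is the confidence
    return ids, conf


logits = np.array([[2.0, 0.5, 0.1],    # one clearly dominant landmark
                   [0.2, 0.3, 0.25]])  # nearly flat logits
ids, conf = predict_softmax(logits)
print(ids, conf)
```

The second toy row, with nearly equal logits, gets a much lower confidence than the first. That is the behaviour one would hope for on a non-landmark image, though nothing in the softmax itself guarantees it.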

Since there is exactly one logit per unique landmark, the model can only ever predict a landmark, so whenever a Valid image is a non-landmark, the prediction is bound to be incorrect. It's therefore desirable for such predictions to come with low confidence, so that they don't bring down the GAP.

Making predictions for validation using recognition-by-retrieval

Another way to make a prediction on an image is to find another image that's similar to it and already labeled and use that label as the prediction.

To do this, there needs to be another labeled dataset that shares the same landmarks as Valid. In this case, since we know what landmarks there are in Valid, we can extract the subset from Public Train that shares the same landmarks with Valid for this purpose. Let's call this set Train, to be consistent with Team DP's paper, but don't confuse it with Public Train and Private Train.

| Dataset name | #(images) | #(class ids) | #(non-landmark images) |
|---|---|---|---|
| Public Train | 1,580,470 | 81,313 | 0 |
| Train | 41,207 | 647 | 0 |
| Valid | 117,172 | 648 | 114,828 (98%) |

In terms of the number of classes, the sole difference between Train and Valid is that Valid has an additional class for non-landmarks.

Each image is represented by the output activation of the model's second-to-last layer, a vector of length 512 (i.e. a list of 512 numbers) called the embedding. Embeddings are obtained for all images in Valid and Train, and the cosine similarity is then calculated for every pair of Valid and Train images. For any two images, their cosine similarity is the dot product of their L2-normalised embeddings.

For each Valid image, look up the Train image that has the largest cosine similarity and use its label as the prediction and the cosine similarity as the prediction confidence. The resulting predictions for Valid can then be used to calculate the GAP.
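The retrieval step can be sketched with NumPy as below. The function and variable names are hypothetical, and the embeddings are tiny 2-D toys rather than real 512-dimensional ones. At the real scale, a 117,172 × 41,207 similarity matrix has about 4.8 billion entries, so in practice it would likely be computed in chunks or on a GPU.

```python
import numpy as np


def l2_normalise(x):
    """Scale each row to unit length so that dot products are cosine similarities."""
    return x / np.linalg.norm(x, axis=1, keepdims=True)


def predict_by_retrieval(valid_emb, train_emb, train_labels):
    """Label and similarity of the nearest Train image for each Valid image."""
    sims = l2_normalise(valid_emb) @ l2_normalise(train_emb).T  # (N_valid, N_train)
    best = sims.argmax(axis=1)                                  # most similar Train image
    return train_labels[best], sims[np.arange(len(best)), best]


train_emb = np.array([[1.0, 0.0], [0.0, 1.0], [0.7, 0.7]])
train_labels = np.array([17, 3, 6])
valid_emb = np.array([[2.0, 0.1], [0.1, 0.9]])
labels, conf = predict_by_retrieval(valid_emb, train_emb, train_labels)
print(labels, conf)
```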

As with softmax prediction, because Train only contains landmarks, the image most similar to a Valid image can only ever be a landmark, so if the Valid image is a non-landmark, the prediction is incorrect and will bring down the GAP.

The GAPs obtained using softmax and using image retrieval were both found to track the public leaderboard (i.e. Public Test) well, so either can be used for checkpointing during training.

Handling non-landmark test images

The GAP can be improved further by using an additional dataset containing only non-landmarks, together with a model ensemble. This post-processing step is described as re-ranking and blending. Let's call the non-landmark-only dataset Non-landmark.

For validation during training, Non-landmark can simply be the non-landmark images from Valid.

Here's how Non-landmark is used. For each image in Train, its cosine similarity with every image in Non-landmark is computed, and the mean of the top-5 similarity scores is taken as a measure of how similar that Train image is to a 'non-landmark'. Doing this for every Train image gives each of them such a non-landmark similarity score.
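A sketch of this non-landmark score, assuming embeddings for Train and Non-landmark are available as NumPy arrays; the function name `non_landmark_score` and the toy embeddings are hypothetical, while `k=5` follows the top-5 mean described above.

```python
import numpy as np


def non_landmark_score(train_emb, nonlandmark_emb, k=5):
    """Mean of the k largest cosine similarities between each Train image and Non-landmark."""
    t = train_emb / np.linalg.norm(train_emb, axis=1, keepdims=True)
    n = nonlandmark_emb / np.linalg.norm(nonlandmark_emb, axis=1, keepdims=True)
    sims = t @ n.T                                  # (N_train, N_nonlandmark)
    k = min(k, sims.shape[1])
    top_k = np.partition(sims, -k, axis=1)[:, -k:]  # k largest per row, in no particular order
    return top_k.mean(axis=1)


nonlandmark_emb = np.array([[1.0, 0.1], [0.9, 0.2], [1.0, -0.1],
                            [0.8, 0.0], [1.0, 0.3], [0.0, 1.0]])
train_emb = np.array([[1.0, 0.0],   # points the same way as most non-landmarks
                      [0.0, 1.0]])  # mostly dissimilar to them
scores = non_landmark_score(train_emb, nonlandmark_emb)
print(scores)
```

`np.partition` is used instead of a full sort because only the top k values per row are needed, which matters when Non-landmark holds over 100k images.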

Then, to make a prediction on a Valid image by image retrieval, its cosine similarity with each Train image is computed as before. This time, however, each Train image's similarity to a 'non-landmark' is subtracted from its similarity score. Why do this? As pointed out above, whenever the image being predicted on is a non-landmark, the prediction is always incorrect. For a good GAP, such a prediction needs to sit far down the list of predictions; in other words, it needs a low prediction confidence. With the subtraction, the more the predicted landmark resembles a 'non-landmark', the more its prediction confidence is reduced, and the less it resembles one, the less the confidence is reduced.
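Adding the penalty to the retrieval step might look like the sketch below. It assumes the per-Train-image non-landmark scores have already been computed; the function name and the toy numbers are hypothetical. In the example, the raw cosine similarities (0.6 and 0.8) favour landmark 3, but after subtracting the non-landmark scores (0.1 and 0.6) the prediction becomes landmark 17 with a confidence of 0.5.

```python
import numpy as np


def predict_with_penalty(valid_emb, train_emb, train_labels, train_nl_score):
    """Retrieval prediction with each Train image's non-landmark score subtracted."""
    v = valid_emb / np.linalg.norm(valid_emb, axis=1, keepdims=True)
    t = train_emb / np.linalg.norm(train_emb, axis=1, keepdims=True)
    # Penalise Train images that resemble non-landmarks (broadcast over Valid rows)
    sims = v @ t.T - train_nl_score[np.newaxis, :]
    best = sims.argmax(axis=1)
    return train_labels[best], sims[np.arange(len(best)), best]


train_emb = np.array([[1.0, 0.0], [0.0, 1.0]])
train_labels = np.array([17, 3])
train_nl_score = np.array([0.1, 0.6])  # assumed pre-computed top-5 means
valid_emb = np.array([[0.6, 0.8]])     # raw cosines: 0.6 with landmark 17, 0.8 with landmark 3
labels, conf = predict_with_penalty(valid_emb, train_emb, train_labels, train_nl_score)
print(labels, conf)
```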

Model ensembling

Suppose 3 models are to be ensembled. The process just described is repeated for each of them, giving 3 similarity matrices between Valid and Train, one per model, each penalised by Train's similarity to Non-landmark.

Then, only the top-3 most similar Train images are kept in each similarity matrix. Gathering them together gives an overall similarity matrix of size \(N_{Valid} \times 9\), where \(N_{Valid}\) is the number of images in Valid; the 9 comes from keeping the 3 most similar Train images for each of the 3 models (\(3 \times 3 = 9\)). As an example, if there are 10 images in Valid, this overall similarity matrix might look like this:

| Valid image id | model 1 | model 2 | model 3 |
|---|---|---|---|
| 0 | 0.8, 0.6, 0.55 | 0.7, 0.68, 0.6 | 0.9, 0.85, 0.5 |
| … | … | … | … |
| 9 | 0.9, 0.87, 0.4 | 0.85, 0.6, 0.5 | 0.97, 0.92, 0.5 |

In this example, according to model 3, the Train image that is most similar to Valid image 0 has a cosine similarity score of 0.9 with it, and the second most similar has a score of 0.85, and so on.

The labels of the Train images in this overall similarity matrix are looked up:

| Valid image id | model 1 | model 2 | model 3 |
|---|---|---|---|
| 0 | 17, 6, 3 | 17, 3, 6 | 17, 3, 8 |
| … | … | … | … |
| 9 | 22, 4, 9 | 4, 22, 9 | 22, 9, 4 |
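Selecting the top-3 Train images per model, concatenating them, and looking up their labels could be done as in the sketch below. It assumes each model's penalised similarity matrix is an `(N_valid, N_train)` NumPy array and that all models index the same Train images in the same order; the function name and the random toy data are hypothetical.

```python
import numpy as np


def top_k_per_model(sim_matrices, train_labels, k=3):
    """Keep the k best Train matches per Valid image for every model.

    sim_matrices: list of (N_valid, N_train) penalised similarity arrays, one per model
    train_labels: (N_train,) landmark id of each Train image
    Returns (scores, labels), each of shape (N_valid, k * number_of_models).
    """
    all_scores, all_labels = [], []
    for sims in sim_matrices:
        idx = np.argsort(-sims, axis=1)[:, :k]  # indices of the k most similar Train images
        all_scores.append(np.take_along_axis(sims, idx, axis=1))
        all_labels.append(train_labels[idx])    # look up their landmark ids
    return np.concatenate(all_scores, axis=1), np.concatenate(all_labels, axis=1)


rng = np.random.default_rng(0)
sims_per_model = [rng.uniform(size=(10, 50)) for _ in range(3)]  # 3 toy models, 10 Valid, 50 Train
train_labels = rng.integers(0, 20, size=50)
scores, labels = top_k_per_model(sims_per_model, train_labels)
print(scores.shape, labels.shape)
```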

For a given Valid image, the similarity scores are summed across all models for each unique Train label. For the example shown in TABLES 4 and 5, this gives:

Valid image 0 $$ \begin{array}{lr} \text{landmark 3:} & 0.55 + 0.68 + 0.85 = 2.08 \\ \text{landmark 6:} & 0.6 + 0.6 = 1.2 \\ \text{landmark 8:} & 0.5 \\ \text{landmark 17:} & 0.8 + 0.7 + 0.9 = 2.4 \end{array} $$

Valid image 9 $$ \begin{array}{lr} \text{landmark 4:} & 0.87 + 0.85 + 0.5 = 2.22 \\ \text{landmark 9:} & 0.4 + 0.5 + 0.92 = 1.82 \\ \text{landmark 22:} & 0.9 + 0.6 + 0.97 = 2.47 \end{array} $$

The predicted landmark is the label with the largest total score and that score is the prediction confidence. In the example, this is (landmark 17, 2.4) for Valid image 0 and (landmark 22, 2.47) for Valid image 9.
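The blending rule can be checked against the worked example with a short Python sketch. The function name `blend` is hypothetical; the scores and labels are copied from the two example tables, flattened model by model.

```python
from collections import defaultdict


def blend(scores, labels):
    """Sum scores per unique landmark label; return the best label and its total."""
    totals = defaultdict(float)
    for score, label in zip(scores, labels):
        totals[label] += score
    best = max(totals, key=totals.get)
    return best, totals[best]


# Each Valid image's 9 entries, taken model by model from the example tables
scores_0 = [0.8, 0.6, 0.55, 0.7, 0.68, 0.6, 0.9, 0.85, 0.5]
labels_0 = [17, 6, 3, 17, 3, 6, 17, 3, 8]
scores_9 = [0.9, 0.87, 0.4, 0.85, 0.6, 0.5, 0.97, 0.92, 0.5]
labels_9 = [22, 4, 9, 4, 22, 9, 22, 9, 4]

print(blend(scores_0, labels_0))  # landmark 17, total about 2.4
print(blend(scores_9, labels_9))  # landmark 22, total about 2.47
```

Because scores are summed rather than averaged, a landmark that several models agree on, or that appears more than once in one model's top-3, accumulates a larger confidence.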

It's found that this way of making an overall prediction from an ensemble improves the GAP.

What happens at test time?

The process of making predictions for obtaining a good GAP has so far been described in terms of Valid, Train and Non-landmark.

| Dataset name | #(images) | #(class ids) | #(non-landmark images) |
|---|---|---|---|
| Train | 41,207 | 647 | 0 |
| Valid | 117,172 | 648 | 114,828 |
| Non-landmark | 114,828 | 1 | 114,828 |

The properties and relationships worth pointing out are:

- The Train set contains only landmarks.
- All landmarks in Valid are also in Train.
- Valid also contains non-landmarks.
- Non-landmark contains only non-landmarks. These don't need to come from Valid; any set of non-landmark images will do.

These are preserved at test time, during submission:

| Dataset name | #(images) | #(class ids) | #(non-landmark images) |
|---|---|---|---|
| Public Train | 1,580,470 | 81,313 | 0 |
| Private Train | ~100,000 | \(C_{private}\) | 0 |
| Public Test | 10,345 | \(C_{public}\) + 1 | > 0 |
| Private Test | ~21,000 | \(C_{private}\) + 1 | > 0 |
| Non-landmark | 114,828 | 1 | 114,828 |

The Non-landmark set can be loaded as an external dataset in the inference notebook. The landmarks in Public Train cover all the landmarks in Public Test, and the landmarks in Private Train cover all the landmarks in Private Test.

Valid is replaced by Public Test or Private Test, and Train by Public Train or Private Train, respectively. Therefore, the image-retrieval prediction method described before can be applied here, too.

Team DP are Dieter and Psinger.