Introduction

In this Kaggle competition hosted by the Coleridge Initiative, the goal is to extract mentions of dataset names from academic papers. For example, given the following piece of text:

In fact, organizations are now identifying digital skills or computer literacy as one of their core values for employability (such as the US Department of Education, the US Department of commerce, the OECD Program for the International Assessment of Adult Competencies and the European Commission).

the objective is to identify that "International Assessment of Adult Competencies" is the mention of a dataset. The hope is that a tool that can do this will help in understanding how publicly funded data are being used.

This is very much a natural language processing competition, and my first. Following a tutorial provided by Kaggle user tungmphung, I approached the problem as named entity recognition, where each word is given one of three labels:

- "B": the first word of a dataset mention

- "I": a word in a dataset mention that is not the first word

- "O": a word that is not part of a dataset mention

Models are then trained on such labelled examples in a supervised setting. For example, part of the text above is labelled as:

... the OECD Program for the International Assessment of Adult Competencies...

... O O O O O B I I I I ...

Hugging Face

Hugging Face is a company that carries out natural language processing research with a particular focus on transformer models, and its open source packages are hugely popular. They appear to be the go-to packages if you want to do natural language processing using transformer models:

- transformers provides a large collection of transformer model architectures, such as BERT and GPT, along with pre-trained parameters for a variety of tasks, like language modelling, token classification, and question answering. This is particularly useful, as these parameters are the result of many hours of training on large language corpora.

- tokenizers provides tools for tokenizing text. Tokenizers are also models that you can train.

- datasets offers fast and compact objects for holding the data to be used by the models. The same information held in native Python types takes a lot more memory than when stored in datasets objects.

In tungmphung's tutorial, the training and inference are run by executing Python scripts, but since I exclusively use Kaggle Notebooks, doing this was slightly awkward. Luckily, Hugging Face also provides a Trainer class, which works more seamlessly in a notebook environment, and there is a nice tutorial on token classification (the general task of which named entity recognition is one instance) available in the documentation.

Splitting text into words

In the example I started off with, text is split into words by simply splitting the string at the spaces and removing any non-alphanumeric characters, meaning a piece of text like this:

the high rate of youth unemployment in Lebanon (37%), one of the highest rates in the world.

is turned into something like this:

['the', 'high', 'rate', 'of', 'youth', 'unemployment', 'in', 'Lebanon', '37',
'one', 'of', 'the', 'highest', 'rates', 'in', 'the', 'world']

where the punctuation, parentheses, and units (in "37%", for example) are lost. To keep these, I used what's called a pre-tokenizer to split the text into words instead. A more traditional tokenizer like spaCy effectively does the same thing, but here I used one that's available in Hugging Face, tokenizers.pre_tokenizers.BertPreTokenizer. This results in:

['the', 'high', 'rate', 'of', 'youth', 'unemployment', 'in', 'Lebanon',
'(', '37', '%', ')', ',', 'one', 'of', 'the', 'highest', 'rates', 'in', 'the',
'world', '.']

I had hoped this would provide a richer structure and more clues for the model to learn, but in practice I did not observe any improvement in terms of the public leaderboard score.
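To make the difference between the two splitting strategies concrete, here is a minimal sketch. The function names are my own, and punctuation_aware_split is only a regex stand-in that approximates what a BERT-style pre-tokenizer does on simple text like this. The last function shows how the real pre-tokenizer could be called, assuming the tokenizers package is installed.

```python
import re


def naive_split(text):
    """Split at whitespace, then strip non-alphanumeric characters from each piece."""
    words = (re.sub(r"[^A-Za-z0-9]", "", piece) for piece in text.split())
    return [word for word in words if word]  # drop pieces that became empty


def punctuation_aware_split(text):
    """Regex approximation: runs of word characters, or single punctuation marks."""
    return re.findall(r"\w+|[^\w\s]", text)


def bert_pre_tokenize(text):
    """The same idea using Hugging Face's pre-tokenizer (needs the tokenizers package)."""
    from tokenizers.pre_tokenizers import BertPreTokenizer

    # pre_tokenize_str returns (word, (start, end)) pairs; keep only the words.
    return [word for word, _span in BertPreTokenizer().pre_tokenize_str(text)]


text = ("the high rate of youth unemployment in Lebanon (37%), "
        "one of the highest rates in the world.")
naive_words = naive_split(text)
rich_words = punctuation_aware_split(text)
```

The naive version loses "(", "%", ")" and the final full stop, while the punctuation-aware version keeps each of them as its own word.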

Splitting paper into chunks

The models that I tried to use for this challenge are the BERT-like ones. Even though these architectures can, in principle, process inputs of any length (by setting max_position_embeddings), and hence texts of any length, in practice the maximum input length is 512 tokens. Lengths greater than that require too many resources to train, and so 512 is the maximum length at which the pre-trained models are available.

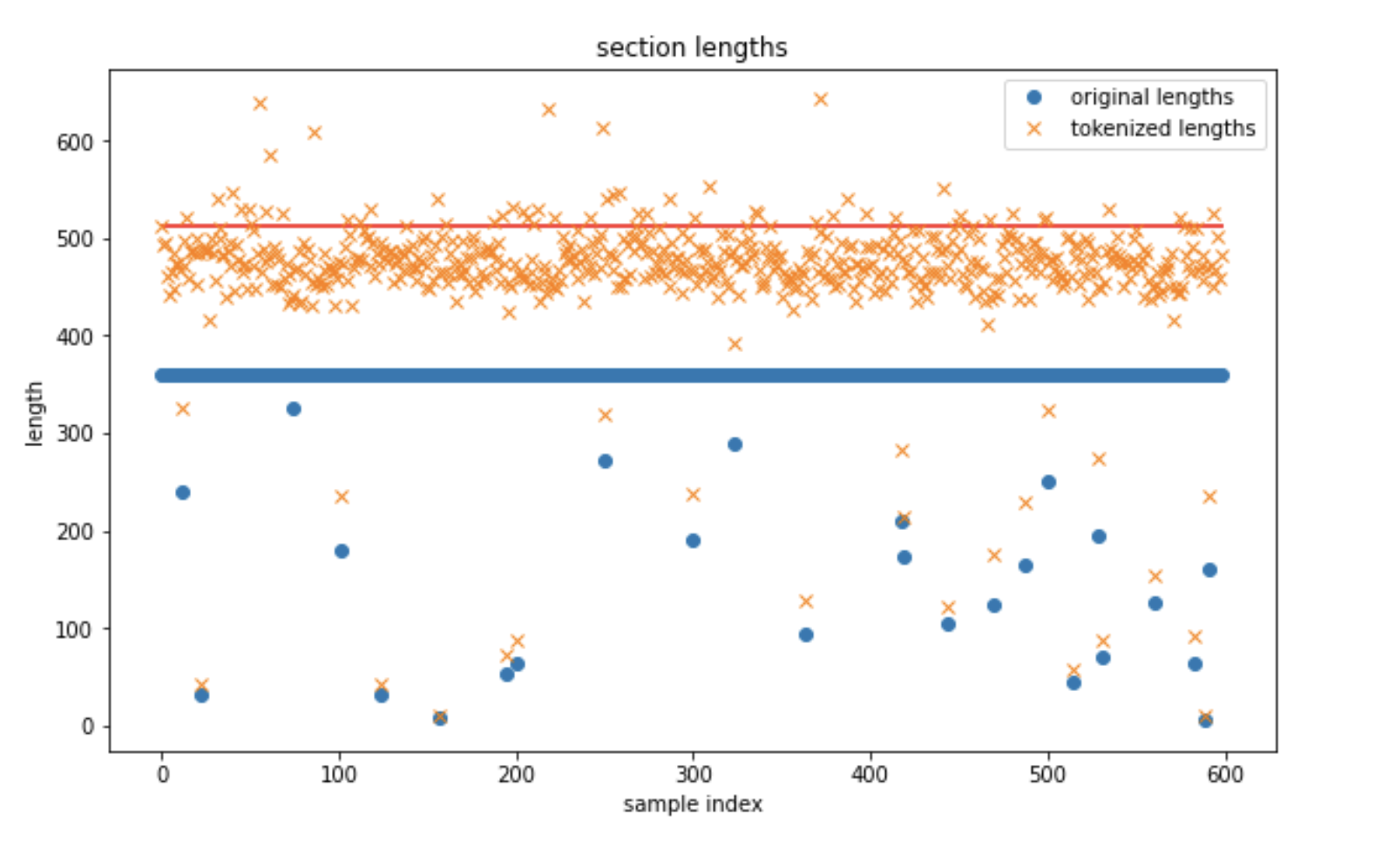

It's therefore necessary to break up the academic papers' texts into chunks in order to have them processed by the models. Taking into account that each word might be broken up into one or more subtokens, I took a sample of 1000 papers, generated chunks at some chosen length, and looked at the number of words and the number of tokens for each chunk.

By looking at such a plot while trying a couple of different chunk lengths, I chose a chunk length of 360 words. At this length, most of the tokenized chunks have a length that falls within the maximum allowed 512 tokens.
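The check described above can be sketched roughly as follows. This is not the notebook's actual code: split_into_chunks and fraction_within_limit are hypothetical helpers, and toy_count_subtokens is a made-up stand-in for a real tokenizer. With a Hugging Face fast tokenizer, the counter could instead be something like len(tokenizer(chunk, is_split_into_words=True)["input_ids"]).

```python
def split_into_chunks(words, chunk_len=360):
    """Cut a list of words into consecutive chunks of at most chunk_len words."""
    return [words[i:i + chunk_len] for i in range(0, len(words), chunk_len)]


def fraction_within_limit(chunks, count_subtokens, max_tokens=512):
    """Fraction of chunks whose tokenized length fits within max_tokens."""
    if not chunks:
        return 1.0
    fitting = sum(1 for chunk in chunks if count_subtokens(chunk) <= max_tokens)
    return fitting / len(chunks)


def toy_count_subtokens(chunk):
    """Made-up counter: long words cost two subtokens, plus two special tokens."""
    return sum(2 if len(word) > 6 else 1 for word in chunk) + 2


# A toy "paper": 700 short words followed by 300 long ones.
paper_words = ["data"] * 700 + ["unemployment"] * 300
chunks = split_into_chunks(paper_words, chunk_len=360)
fraction = fraction_within_limit(chunks, toy_count_subtokens)
```

In this toy case the last chunk consists entirely of long words and exceeds the limit, so only two of the three chunks fit. Repeating the measurement for a few values of chunk_len is one way to arrive at a choice like 360.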

If the model receives an input that exceeds this length, an error is raised, so it's common to tell the tokenizer to truncate the number of subtokens to 512 to avoid this error. But then the words whose subtokens have been cut off by the truncation are never seen by the model, which is not good, especially when they could contain a dataset mention. So, it's best to choose an appropriate chunk length before tokenizing.

A very small chunk length will guarantee that all tokenized chunks will not exceed the 512 length, but it'll mean that the model's capacity to learn sequences up to that length is under-utilised.

In the original example I started with, the model is given sentences, but I chose to simply give the model chunks of 360 words, each of which can span multiple sentences or sections in the paper. This should have allowed the models to learn longer dependencies.

At inference, I used smaller chunk lengths, between 200 and 340, to ensure that every single tokenized chunk has a length smaller than 512, because absolutely no text should be left out at inference.
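One way to guarantee that nothing is truncated at inference is to try candidate chunk lengths from largest to smallest and keep the first one for which every chunk fits. This is only a sketch of that idea, not what I actually ran: largest_safe_chunk_len, the candidate values, and the toy counters are all hypothetical.

```python
def largest_safe_chunk_len(words, count_subtokens,
                           candidates=(340, 300, 260, 200), max_tokens=512):
    """Return the largest candidate chunk length for which every chunk fits.

    count_subtokens is any callable mapping a list of words to the number of
    subtokens the model would receive. Returns None if no candidate is safe.
    """
    for chunk_len in sorted(candidates, reverse=True):
        chunks = [words[i:i + chunk_len] for i in range(0, len(words), chunk_len)]
        if all(count_subtokens(chunk) <= max_tokens for chunk in chunks):
            return chunk_len
    return None


# Toy counters standing in for a real tokenizer (two special tokens per chunk).
one_per_word = lambda chunk: len(chunk) + 2
two_per_word = lambda chunk: 2 * len(chunk) + 2
three_per_word = lambda chunk: 3 * len(chunk) + 2

words = ["word"] * 1000
easy = largest_safe_chunk_len(words, one_per_word)
hard = largest_safe_chunk_len(words, two_per_word)
impossible = largest_safe_chunk_len(words, three_per_word)
```

When no candidate is safe the function returns None, which signals that an even smaller chunk length is needed for that text.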

On a related note, aside from the BERT models, there's the Longformer, which is available pre-trained and is capable of processing inputs of up to 4096 tokens. However, it couldn't be trivially incorporated into the workflow I had been using for the BERT family of models. For example, its tokenizer is a Python-based tokenizer, and its output has no word_ids method, so there's no obvious way to map the subtokens back to the input words. It's worth noting that the compute time of the Longformer scales linearly with input length, as opposed to quadratically for standard transformers.
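To show why the word-to-subtoken mapping matters, here is a small sketch of how word-level labels can be carried over to subtokens once that mapping is known. The word_ids list below is written by hand to mimic the kind of output a fast tokenizer gives (None for special tokens, otherwise the index of the source word). Labelling only the first subtoken of each word and masking the rest with -100 is one common convention, assumed here rather than taken from my notebook.

```python
def align_labels_to_subtokens(word_labels, word_ids, ignore_index=-100):
    """Give each word's label to its first subtoken and mask everything else.

    ignore_index=-100 is the value PyTorch's cross-entropy loss skips by default.
    """
    aligned = []
    previous_word = None
    for word_id in word_ids:
        if word_id is None:
            aligned.append(ignore_index)          # special token such as [CLS] or [SEP]
        elif word_id != previous_word:
            aligned.append(word_labels[word_id])  # first subtoken of a new word
        else:
            aligned.append(ignore_index)          # later subtoken of the same word
        previous_word = word_id
    return aligned


# Labels encoded as O=0, B=1, I=2 for the words ["the", "International", "Assessment"].
word_labels = [0, 1, 2]
# Hand-written mapping: "International" is pretended to split into two subtokens.
word_ids = [None, 0, 1, 1, 2, None]
aligned = align_labels_to_subtokens(word_labels, word_ids)
```

Without something equivalent to word_ids, there is no reliable way to build this alignment, or to map subtoken predictions back to words at inference.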

Using special tokens

A typical academic paper consists of several sections. The first sections tend to be the abstract and the introduction. Perhaps dataset mentions tend to appear in certain sections, in which case it might be useful to tell the model which bits of the input correspond to section titles and which bits correspond to section texts.

To help do this, I inserted special tags into the original text to indicate the beginning and the end of section titles and section texts, namely: AAAsTITLE, ZZZsTITLE, AAAsTEXT, and ZZZsTEXT. For example, if the title of the introduction section of a paper is "Introduction", it becomes "AAAsTITLE Introduction ZZZsTITLE".

Now, chances are a word like "AAAsTEXT" is not in the tokenizer's vocabulary, so under normal circumstances it will be split up into subtokens that are in the vocabulary. It's therefore necessary to extend the current vocabulary to include these tags, and this can be done via the additional_special_tokens keyword argument when instantiating the tokenizer.

The model's embeddings also need to be expanded to accommodate these new tokens. This is done by running model.resize_token_embeddings(len(tokenizer)) just after instantiation.
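Put together, the tagging and the vocabulary extension could look something like the sketch below. tag_section and load_tokenizer_and_model are hypothetical helpers, and the exact way a title and its text are joined is an assumption. The second function needs the transformers package and downloads a checkpoint, so it is defined here but not called.

```python
SPECIAL_TAGS = ["AAAsTITLE", "ZZZsTITLE", "AAAsTEXT", "ZZZsTEXT"]


def tag_section(title, text):
    """Wrap a section's title and body in the begin/end tags (assumed layout)."""
    return f"AAAsTITLE {title} ZZZsTITLE AAAsTEXT {text} ZZZsTEXT"


def load_tokenizer_and_model(checkpoint="distilbert-base-cased", num_labels=3):
    """Load a tokenizer that knows the tags, and resize the model to match."""
    from transformers import AutoModelForTokenClassification, AutoTokenizer

    # Register the tags so that each one is kept as a single token.
    tokenizer = AutoTokenizer.from_pretrained(
        checkpoint, additional_special_tokens=SPECIAL_TAGS
    )
    model = AutoModelForTokenClassification.from_pretrained(
        checkpoint, num_labels=num_labels  # B, I and O
    )
    # Add embedding rows for the newly registered tokens.
    model.resize_token_embeddings(len(tokenizer))
    return tokenizer, model


tagged = tag_section("Introduction", "Digital skills are a core value.")
```

The embeddings for the new tokens start out untrained, so the model only learns what the tags mean during fine-tuning.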

Undersampling of negative samples

Because dataset mentions are sparse in a paper, there are many chunks that don't contain a mention of a dataset. These chunks are negative samples, and there are many more of them than there are positive samples, that is, chunks that do contain a dataset mention. For training, I tried keeping all the negative samples, keeping only 20% of them, or keeping only those that contain words like "study" or "data". Due to limited training time and analysis, it's not clear which is best, but I observed that the metric improves most slowly when all the negative samples are used.
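The two undersampling strategies can be sketched as follows, assuming each sample is a (words, labels) pair with "B", "I" and "O" labels. The helper names, the fixed seed, and the toy samples are all made up for illustration.

```python
import random


def has_mention(labels):
    """A chunk is positive if any word is labelled as part of a mention."""
    return any(label != "O" for label in labels)


def undersample_negatives(samples, keep_fraction=0.2, seed=0):
    """Keep all positive chunks and a random fraction of the negative ones."""
    rng = random.Random(seed)
    positives = [s for s in samples if has_mention(s[1])]
    negatives = [s for s in samples if not has_mention(s[1])]
    n_keep = round(len(negatives) * keep_fraction)
    return positives + rng.sample(negatives, n_keep)


def keep_keyword_negatives(samples, keywords=("study", "data")):
    """Keep all positive chunks, and negatives that contain one of the keywords."""
    return [
        (words, labels)
        for words, labels in samples
        if has_mention(labels) or any(word.lower() in keywords for word in words)
    ]


# Toy data: 2 positive chunks and 10 negative ones, one of which says "study".
samples = [
    (["the", "International", "Assessment"], ["O", "B", "I"]),
    (["Adult", "Competencies", "survey"], ["B", "I", "O"]),
]
samples += [(["no", "mention", f"chunk{i}"], ["O", "O", "O"]) for i in range(9)]
samples += [(["this", "study", "is", "new"], ["O", "O", "O", "O"])]

reduced = undersample_negatives(samples, keep_fraction=0.2)
keyword_filtered = keep_keyword_negatives(samples)
```

Both strategies keep every positive chunk and only differ in which negative chunks survive.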

Training

I tried distilbert-base-cased, xlm-roberta-base, and roberta-base. DistilBERT was used throughout the challenge as it's faster to test out ideas with it.

Even though RoBERTa and XLM-RoBERTa both give better F1 scores during training, they do not lead to a higher public leaderboard score. For these models, the per-device batch size is 8, whilst for DistilBERT, 20 can be used.

Literal matching improves the score from 0.418 to 0.498, using DistilBERT.
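As a rough illustration, and assuming that literal matching here means searching the text for dataset names that are already known (for example, from the training labels), it could be sketched like this. The function names and the example names are hypothetical.

```python
import re


def literal_matches(text, known_names):
    """Return the known dataset names that appear verbatim (case-insensitively) in text."""
    lowered = text.lower()
    found = []
    for name in known_names:
        # Word boundaries avoid matching a name inside a longer word.
        pattern = r"\b" + re.escape(name.lower()) + r"\b"
        if re.search(pattern, lowered):
            found.append(name)
    return found


def combine_predictions(model_mentions, matched_names):
    """Union of model predictions and literal matches, keeping first-seen order."""
    return list(dict.fromkeys(list(model_mentions) + list(matched_names)))


known_names = [
    "Program for the International Assessment of Adult Competencies",
    "National Education Longitudinal Study",
]
text = ("We use data from the Program for the International Assessment "
        "of Adult Competencies.")
matched = literal_matches(text, known_names)
combined = combine_predictions(["Adult Competencies survey"], matched)
```

Literal matching can only find names it has seen before, which is why it is combined with the model's predictions rather than used on its own.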

Ensembling all of them does lead to an improvement, from 0.499 to 0.526 on the public leaderboard.

Filtering predicted mentions

The original example I followed includes a step where predicted dataset mentions that are too similar to each other are removed, keeping just one of them. However, I found that keeping all of them improved the public leaderboard score (from 0.499 to 0.526).
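For reference, a filtering step of this kind could look like the sketch below. The similarity measure (Jaccard similarity on lower-cased words) and the threshold of 0.5 are my assumptions for illustration, and may not match the original example exactly.

```python
def jaccard(a, b):
    """Jaccard similarity between the sets of lower-cased words in two strings."""
    set_a, set_b = set(a.lower().split()), set(b.lower().split())
    if not set_a and not set_b:
        return 1.0
    return len(set_a & set_b) / len(set_a | set_b)


def drop_near_duplicates(mentions, threshold=0.5):
    """Keep a mention only if it is not too similar to one that is already kept."""
    kept = []
    for mention in mentions:
        if all(jaccard(mention, other) < threshold for other in kept):
            kept.append(mention)
    return kept


predicted = [
    "National Education Longitudinal Study",
    "Education Longitudinal Study",
    "Program for the International Assessment of Adult Competencies",
]
filtered = drop_near_duplicates(predicted)
```

Here the second mention shares three of its four distinct words with the first and is dropped. Skipping this step simply means submitting predicted as it is.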

Conclusion

My final standing was only in the top 62%. Even though my position moved up quite a few places on the private leaderboard, my private leaderboard score was only 0.022! This likely means that I overfitted big time.

According to a comment from the winning team, NER using BERTs is not a good approach for this problem, because somehow the BERTs are so "smart" that they tend to jump straight to what they think looks like a dataset mention without learning about the context, leading to overfitting. What they did instead was to use GPT and beam search, such that the model is "forced" to predict whether the next word in the text is the start of a dataset mention or the end of a dataset mention. Having said that, there are others who used BERT NER and finished really high, so it's probably more to do with problems with my implementation.

Anyway, I might return to this competition after I've had a look at some of the winners' solutions.